OFFICE OF ADVANCED SIMULATION AND COMPUTING AND INSTITUTIONAL R&D PROGRAMS (NA-114)

Quarterly Highlights | Volume 4, Issue 3 | July 2021

In This Issue

LANL uses machine learning to characterize plutonium impurities.

LLNL’s 2021 AAR process benefits significantly from 3D baselines.

Sandia develops a new calibration and uncertainty propagation method.

Sandia builds credibility for simulations of electromagnetic pulse environments.

LANL predicts strength changes in metals due to helium bubbles.

High GPU performance achieved by the LLNL Cheetah high explosive model.

Fragmentation and material failure modeling for stockpile stewardship is accelerated 20x.

Sandia’s Next-Generation Simulation Project.

LLNL researchers analyze near-surface nuclear detonations to better predict potential damage.

Intentional “Imperfections”: LANL researchers discover new capability for advanced manufacturing.

ASC & LDRD Community—Upcoming Events (at time of publication)

- Virtual Machine Learning for Industry Forum; August 10-12 (https://ml4i.llnl.gov/)

- ASC Virtual Technical Seminar: Using Machine Learning for Radiographic Analysis and Identification of Material Fracture, K. Lewis (LLNL); August 17

- ASC Virtual Technical Seminar: Tri-Lab Computing Environment - A Collaboration for Tri-Lab HPC Users, M. Legendre (LLNL); August 31

- Stewardship Capability Delivery Schedule Virtual Mini-Summit; September 8-9

- V&V Workshop (hosted by LANL); October 19-21

- Predictive Engineering Science Panel (PESP) meeting at SNL-CA; week of October 25th

- Predictive Science Panel (PSP) Meeting at LANL; November 1-5

- Supercomputing 2021, St. Louis, MO (with hybrid virtual sessions); November 14-19

Questions? Comments? Contact Us.

Welcome to the third 2021 issue of the NA-114 newsletter, published quarterly to socialize the impactful work being performed by the NNSA laboratories and our other partners. This issue begins with a highlight from Los Alamos National Laboratory (LANL), in which researchers are combining Laser Induced Breakdown Spectroscopy (LIBS) experimental data with newly developed machine learning software to provide rapid detection of impurities, which may facilitate faster plutonium (Pu) characterization and accelerate pit production. Also highlighted in this issue is Lawrence Livermore National Laboratory’s (LLNL’s) computational simulation capability on the Sierra system supporting the 2021 Annual Assessment Report (AAR) process, with high-fidelity 3D primary simulation emerging as a standard tool of choice for the laboratory’s AAR community. Other highlights include:

- LLNL’s release of MARBL v1.0, a next-generation multiphysics code for stockpile stewardship.

- Efforts at Sandia National Laboratories (SNL) developing a new calibration and uncertainty propagation method that addresses the lack of experimental calibration data common in science and engineering applications.

- LANL’s predictions of strength changes in metals due to helium bubbles formed by irradiation.

- SNL’s efforts in building credibility for simulations of electromagnetic pulse environments relevant to future weapon systems.

- LLNL’s optimization of the Cheetah high explosive (HE) model for graphics processing unit (GPU)-based computing system architectures demonstrating up to 15x speed-up, which will benefit overall productivity for stockpile stewardship calculations.

Please join me in congratulating the dedicated professionals who delivered the achievements highlighted in this newsletter, in support of our national security mission.

Thuc Hoang

NA-114 Office Director

LANL uses machine learning to characterize plutonium impurities and accelerate pit production.

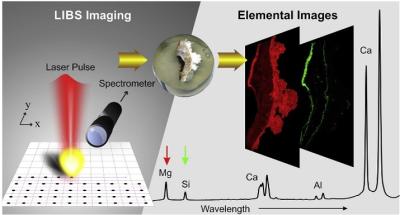

Laser Induced Breakdown Spectroscopy (LIBS) is a fast analytical technique that can quickly characterize the elemental composition of a target sample. LIBS operates by producing a focused laser pulse that transforms less than a microgram of the sample surface into a plasma. Within that plasma, atoms and ions are excited to higher energy states. The excited species emit photons with characteristic wavelengths that are indicative of the chemical elements that are present in the sample. LIBS probes this “atomic fingerprint” of the target material. LIBS is a rapid and versatile tool used in a wide range of applications in the space, steel, aluminum, energy, pharmaceutical, building, and waste management industries. LANL has a unique expertise with this technique and developed instruments on the Mars Curiosity Rover (ChemCam) and the Perseverance Rover (SuperCam) that use LIBS for geological characterization.

Traditional methods for characterizing the plutonium (Pu) used in pits rely on analytical chemistry. This can take weeks because of space limitations inside radiologically hot gloveboxes and the difficulty of packaging and transporting samples from these gloveboxes to a separate facility. Initial work has shown that LIBS may be useful for rapid identification of impurities in bulk metal samples during manufacturing processes at the LANL Plutonium Facility. If this project proves successful, it has the potential to decrease analysis times from weeks to just a few minutes.

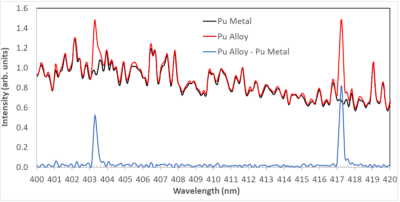

The actinide metals used in manufacturing efforts have extremely complex emission spectra that contain potentially tens of thousands of spectral lines. A snapshot of these emission lines from a LIBS measurement on two Pu samples (one pure metal and one alloy) over a small wavelength region is shown in Figure 2. While the many emission lines from Pu dominate the spectrum, it is possible to identify two prominent lines from gallium (Ga), an important impurity in the alloy. While in this example the Ga lines are quite evident, this is often not the case for smaller impurity amounts. To reliably and efficiently determine if smaller impurity amounts may be present in a LIBS sample, a LANL team is developing machine learning (ML) software (based on convolutional neural networks) to provide rapid detection of such impurities and to quantify impurity amounts. The ML tools train on data taken in complementary experimental work funded by the LANL Pu Sustainment Program. The ML software will then be able to assess a newly measured dataset and compare to its training sets, reporting its assessment (including a confidence limit) of the presence of impurities and (ideally) their concentration. Preliminary results indicate that impurities of Ga can be identified and quantified. Other elemental impurities, such as Fe, have also been identified. (LA-UR-21-25693)
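As a rough illustration of why convolutional models suit this problem, the sketch below applies the sliding dot product used by a 1D convolutional layer to a synthetic spectrum. It is not the LANL software: the spectrum, the line position (channel 120), and the hand-chosen Gaussian kernel are all hypothetical, and a real network would learn many such kernels from training data rather than use a fixed one.

```python
import math

def gaussian_line(center, width, n_channels, amplitude=1.0):
    """Synthetic emission line: a Gaussian peak sampled on integer channels."""
    return [amplitude * math.exp(-0.5 * ((x - center) / width) ** 2)
            for x in range(n_channels)]

def convolve_valid(signal, kernel):
    """Sliding dot product, the core operation of a 1D convolutional layer."""
    k = len(kernel)
    return [sum(signal[i + m] * kernel[m] for m in range(k))
            for i in range(len(signal) - k + 1)]

# Hypothetical spectrum: a rippled background plus one weak impurity line.
n_channels = 200
background = [0.2 + 0.02 * math.sin(0.9 * x) for x in range(n_channels)]
weak_line = gaussian_line(center=120, width=2.0, n_channels=n_channels, amplitude=0.3)
spectrum = [b + s for b, s in zip(background, weak_line)]

# Hand-chosen kernel shaped like the line; subtracting the mean makes it
# insensitive to a flat background offset.
raw_kernel = gaussian_line(center=7, width=2.0, n_channels=15)
kernel_mean = sum(raw_kernel) / len(raw_kernel)
kernel = [v - kernel_mean for v in raw_kernel]

response = convolve_valid(spectrum, kernel)
best_window = max(range(len(response)), key=response.__getitem__)
detected_channel = best_window + len(kernel) // 2  # center of the best window
print("strongest response near channel", detected_channel)
```

The response peaks where the local shape of the spectrum matches the kernel, which is how a convolutional layer can flag a weak line that is hard to see against a crowded background.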

LLNL’s 2021 AAR process benefits significantly from 3D baselines.

LLNL’s 2021 Annual Assessment Report (AAR) process is benefitting significantly from ASC support. This year, LLNL’s computational simulation capability on the Sierra High Performance Computing (HPC) system has been key to success.

Taking advantage of the performance gains of Sierra, a 3-dimensional (3D) primary baseline incorporating several above ground and underground tests was developed for one of the weapons systems. Although 3D simulation has played an important role for years in AAR assessments, this is the first time a 3D baseline is being used to make the primary assessment.

Another system has moved its models to standard meshing templates, allowing them to address a high-priority 3D physics issue within the system. High fidelity 3D primary simulation is emerging as a standard tool of choice for LLNL’s AAR community. All three systems continue to make progress in developing full system models taking advantage of recent work done by LLNL’s ASC code teams to establish this capability. Finally, ASC integrated code project leaders served on the internal physics review committee, providing valuable feedback to the AAR teams about the finer details of using the codes.

The participation of ASC staff in the AAR process is invaluable as a mechanism for sharing information about code requirements between the physics design and integrated code development communities. (LLNL-ABS-824663)

LLNL ASC team announces release of MARBL v1.0, a next generation multiphysics code for stockpile stewardship.

LLNL’s Multiphysics on Advanced Platforms Project (MAPP), which is part of the ASC Advanced Technology Development and Mitigation (ATDM) NextGen Code Development Program, proudly announced the release of MARBL v1.0 on the Livermore Computing systems. MARBL is a next generation multiphysics code based on high-order numerical algorithms and modular computer science infrastructure. While LLNL has been steadily releasing alpha versions of MARBL to early adopters, the v1.0 release that occurred on March 19th, 2021 is the first production release of this code. Recently, the MAPP team demonstrated MARBL’s capabilities on a variety of programmatically relevant applications with excellent computational performance on the Advanced Technology System (ATS) Sierra, the Advanced Architecture Prototype System (AAPS) Astra, and Commodity Technology System (CTS) architectures. This work resulted in a highly successful L1 milestone report, delivered in December 2020.

MARBL’s early focus on robust infrastructure has played a significant role in this success, especially during the ongoing COVID pandemic, where the majority of code development has taken place remotely. MARBL’s design centers around its modular Computer Science, Physics, and Math infrastructure, much of which is developed and released as open-source software. Its focus on rapid iteration, in-depth code reviews, and modern software quality assurance (SQA) practices, such as continuous integration, regression testing, and documentation, has greatly improved developer productivity. Similarly, its flexible problem setup and streamlined workflows have helped users rapidly set up problems and work effectively with tools in the high performance computing (HPC) ecosystem to interrogate their results. MARBL’s focus on enhanced end-user productivity leads to faster user turnaround for multiphysics simulations on advanced architectures and less manual user intervention.

MARBL addresses the modeling needs of the high-energy density physics (HEDP) community for simulating high-explosive, magnetic, or laser driven experiments such as inertial confinement fusion (ICF), pulsed-power magneto-hydrodynamics (MHD), equation of state (EOS), and material strength studies as part of the NNSA Stockpile Stewardship Program (SSP). LLNL is actively investigating means of incorporating MARBL into emerging digital engineering workflows, such as those based on ML and design optimization.

(LLNL-ABS-824676)

Sandia develops a new calibration and uncertainty propagation method that addresses the lack of experimental calibration data common in science and engineering applications.

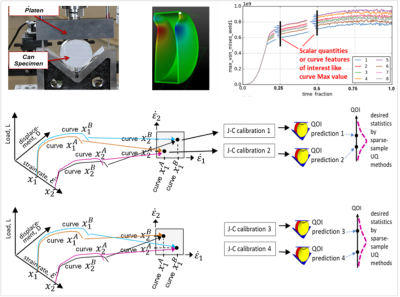

A newly developed “Discrete Direct” (DD) sparse-sample model calibration and uncertainty propagation method has been successfully used to process experimental results into reliably conservative and efficient bounding estimates of desired response statistics for Quantification of Margins and Uncertainty (QMU) analysis. The methodology addresses a major difficulty caused by a lack of experimental calibration data in many science and engineering circumstances. The figure on the left summarizes an optimized DD approach that averages statistical estimates of response based on equally legitimate calibration parameter sets from combinations of the experimental calibration data. The new methodology has been confirmed on a rigorous solid-mechanics test problem recently published in an American Society of Mechanical Engineers (ASME) journal paper. Currently, it is being applied to weld modeling, structural dynamics bolted-joint parameter estimation, and radiation-damaged electronics QMU applications.

(SAND2021-6594 O)

ASC researchers at Sandia build credibility for simulations of electromagnetic pulse environments relevant to future weapon systems.

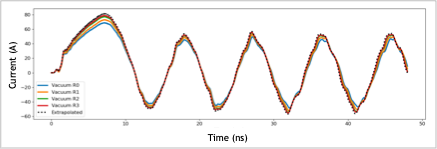

SNL’s Electromagnetic Plasma In Radiation Environments (EMPIRE) code team is in the process of generating the credibility evidence necessary to establish EMPIRE as a qualification tool for the W87-1 life extension program as well as for future systems such as the Next Navy Warhead (the W93). Using the Sierra HPC system at LLNL, the team completed a convergence study of a simulation of an X-ray driven cavity experiment fielded at the National Ignition Facility (NIF), using 1.6 billion elements and 3.4 billion particles at the highest resolution. The study demonstrated that the key quantity of interest (the current on one of the cables, shown in Figure 5) is converging at an approximately first-order rate. This is the first step in assessing the numerical error in this simulation so that it can be validated with the NIF experimental data and ultimately generate the credibility evidence needed for highly stringent weapons applications.

(SAND2021-6815 O)
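For readers unfamiliar with convergence studies, the sketch below shows one common way to estimate an observed convergence rate from a quantity of interest computed at three resolutions. The article does not say how the EMPIRE team computed its rate, so this standard three-level estimate is only an assumption, and the sample values are hypothetical rather than EMPIRE results.

```python
import math

def observed_order(coarse, medium, fine, refinement_ratio):
    """Estimate convergence order from three solutions on successively refined meshes.

    Assumes a constant refinement ratio between levels and an error that
    behaves like C * h**p, so successive differences shrink by ratio**p.
    """
    return (math.log(abs(coarse - medium) / abs(medium - fine))
            / math.log(refinement_ratio))

# Hypothetical quantity of interest (arbitrary units) with an error
# proportional to the mesh size h, i.e. first-order behavior.
limit_value = 1.0
samples = [limit_value + 0.08 * h for h in (1.0, 0.5, 0.25)]

order = observed_order(*samples, refinement_ratio=2.0)
print(f"observed order of convergence: {order:.3f}")
```

An observed order near 1 means that halving the mesh size roughly halves the remaining error, which is what "first-order" convergence describes.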

LANL predicts strength changes in metals due to helium bubbles formed by irradiation.

The strength of a material is a measure of its resistance to changes in shape. Strength is important in a wide variety of national security applications, ranging from conventional shaped charges to performance of the nuclear stockpile. At low pressures the strength can be directly measured up to deformation rates as high as 500,000 per second. To put this in perspective, at the upper end of this range of deformation rates, a penny would be squished to one half its thickness in a millionth of a second. However, strength cannot be directly measured at large confining pressures and must be inferred through inverse modeling combined with other assumptions. In addition, strengths at especially high strain rates remain beyond the reach of direct measurement. For these combinations of material response, we depend on models to extrapolate from observable conditions to those that occur in real physical systems. To constrain the extrapolation, we use information from molecular dynamics simulations that describe the fundamental processes important for strength.
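The penny comparison can be checked with simple arithmetic. The sketch below assumes uniform compression at a constant rate and shows both the engineering-strain reading, which reproduces the "one half" figure, and the true (logarithmic) strain reading; the function name and the choice to show both conventions are illustrative assumptions, not part of the LANL work.

```python
import math

def thickness_fraction(strain_rate_per_s, duration_s, true_strain=False):
    """Fraction of the original thickness left after uniform compression."""
    strain = strain_rate_per_s * duration_s
    if true_strain:
        # Constant true strain rate: thickness decays exponentially.
        return math.exp(-strain)
    # Constant engineering strain rate: thickness decreases linearly.
    return 1.0 - strain

rate = 5.0e5       # deformation rate from the article, per second
duration = 1.0e-6  # one millionth of a second

engineering = thickness_fraction(rate, duration)
logarithmic = thickness_fraction(rate, duration, true_strain=True)
print(f"engineering-strain estimate: {engineering:.2f} of original thickness")
print(f"true-strain estimate:        {logarithmic:.2f} of original thickness")
```

Under the engineering-strain convention the accumulated strain is 500,000 per second times one microsecond, or 0.5, so the penny ends at about half its original thickness; the true-strain convention gives roughly 0.61.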

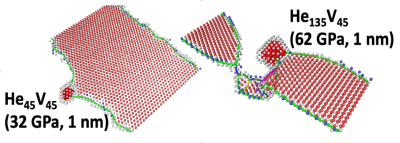

LANL scientists recently conducted over a million central processing unit (CPU)-hours of calculations to build a database of the effect of helium bubbles on characteristics of the strength governed by dislocation mobility. Helium bubbles are caused by irradiation and can occur in natural aging of radioactive materials (such as plutonium) or when materials are placed in the presence of radioactive materials. The simulations included a domain of approximately 2 million atoms with a cluster of atomic vacancies (i.e. missing atoms) and additional helium atoms. Each particular combination of vacancies and helium atoms in the cluster defines a specific bubble size and internal pressure. For example, a cluster of 45 vacancies filled with 135 helium atoms leads to an internal pressure of 62 gigapascals (GPa) and a bubble size of approximately one nanometer. The results from this suite of calculations have been analyzed to identify the critical stress required to move a dislocation past the helium bubble.

This data will be used to parameterize a physics-based model representing the change of strength associated with the evolution of lattice defects caused by aging under important loading conditions that are inaccessible by conventional experiments. (LA-UR-21-25060)

High GPU performance achieved by the LLNL Cheetah high explosive model enables large weapon simulations.

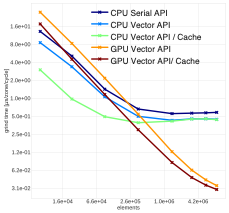

The Cheetah high explosive (HE) code operates within the ARES and ALE3D multiphysics codes to offer advanced high explosive equation of state and burn kinetic models. The code is based on advanced on-demand physics algorithms.

Chemical equilibrium calculations are stored in a sparse EOS database that is used to answer future queries and reduce the number of chemical equilibrium solutions that need to be executed. Recent efforts to optimize Cheetah for GPU architectures have greatly enhanced performance for large zone-count calculations, demonstrating up to a factor of 15 speed-up, which will benefit overall productivity for calculations of interest to the Weapons Program.
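The on-demand idea described above can be sketched as a cache in front of an expensive solver. This is a minimal illustration, not Cheetah's implementation: the class name, the nearest-bin lookup (a real code would interpolate between stored points), and the toy pressure formula standing in for a chemical equilibrium solve are all assumptions.

```python
class OnDemandTable:
    """Sparse cache of solver results, filled only where queries actually land."""

    def __init__(self, solver, bin_width):
        self.solver = solver
        self.bin_width = bin_width
        self.table = {}        # sparse: only visited bins are stored
        self.solver_calls = 0

    def _key(self, density, energy):
        # Quantize the state point so nearby queries share one table entry.
        return (round(density / self.bin_width), round(energy / self.bin_width))

    def pressure(self, density, energy):
        key = self._key(density, energy)
        if key not in self.table:
            # Cache miss: run the expensive solve at the bin center and store it.
            self.solver_calls += 1
            self.table[key] = self.solver(key[0] * self.bin_width,
                                          key[1] * self.bin_width)
        return self.table[key]

def toy_equilibrium_solver(density, energy):
    """Hypothetical stand-in for a costly chemical equilibrium calculation."""
    return 0.4 * density * energy

table = OnDemandTable(toy_equilibrium_solver, bin_width=0.01)
queries = [(1.600, 4.000), (1.601, 4.001), (1.602, 3.999), (1.700, 4.000)]
pressures = [table.pressure(rho, e) for rho, e in queries]
print(table.solver_calls, "solver calls for", len(queries), "queries")
```

Because neighboring zones in a simulation tend to visit similar states, most queries hit entries that already exist, and the expensive solve runs far less often than once per zone per step.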

There were several important steps to achieving GPU performance with Cheetah. Establishing a vector interface, and rewriting significant portions of the code to process vector loops, was a necessary step. Once the vector application programming interface (API) was established, however, further modifications to the code were necessary. All memory allocations were put under the control of the LLNL Umpire library, which significantly improved the efficiency of temporary memory allocations. All loops handling vectors of zones were dispatched to the GPU by the LLNL RAJA abstraction library. Matrix memory access was found to be more efficient with a column-oriented packing scheme on GPUs, while row-oriented memory access was more efficient on CPU-based platforms. This was handled by developing a C++ abstraction layer that allowed compile-time control over memory layouts, so that code changes necessary for GPUs did not have adverse effects on CPU-based systems. Finally, in-depth analysis of critical database interpolation loops at the assembly code level was necessary to identify memory access bottlenecks. Changes were made to reduce register pressure, and critical functions were converted to C++ templates, allowing short loops to be eliminated at compile time.
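The layout abstraction can be illustrated with a small sketch in which calling code indexes a matrix by (row, column) and never sees how entries are packed in memory. This is only an analogy: the Cheetah layer is written in C++ and fixes the layout at compile time, whereas this hypothetical Python class picks it at construction, and none of the names below come from Cheetah.

```python
class Matrix:
    """Dense matrix in a flat list; the packing scheme is chosen at construction."""

    def __init__(self, n_rows, n_cols, column_major):
        self.n_rows, self.n_cols = n_rows, n_cols
        self.column_major = column_major
        self.data = [0.0] * (n_rows * n_cols)

    def _offset(self, i, j):
        if self.column_major:
            return j * self.n_rows + i  # entries of one column are contiguous
        return i * self.n_cols + j      # entries of one row are contiguous

    def __getitem__(self, ij):
        return self.data[self._offset(*ij)]

    def __setitem__(self, ij, value):
        self.data[self._offset(*ij)] = value

def fill(matrix):
    """Layout-agnostic caller: writes 10*i + j into entry (i, j)."""
    for i in range(matrix.n_rows):
        for j in range(matrix.n_cols):
            matrix[i, j] = 10.0 * i + j
    return matrix

row_major = fill(Matrix(2, 3, column_major=False))
col_major = fill(Matrix(2, 3, column_major=True))
print(row_major.data)  # rows stored one after another
print(col_major.data)  # columns stored one after another
```

The same `fill` routine works for both packings, which is the property that lets a single code base serve platforms that prefer different memory access patterns.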

The code work was presented as part of a Physics and Engineering Model (PEM) Level 2 Milestone. According to the milestone review committee, “The GPU results for Cheetah and MSLib in particular surpassed the committee's expectations, given the relative complexity of those codes.” (LLNL-ABS-824666)

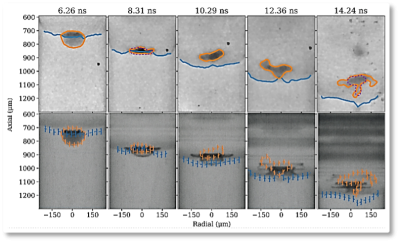

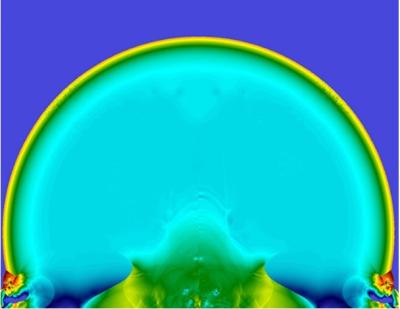

LANL’s new “Quiet Start” method resolves longstanding discrepancy in simulations of high-energy density physics experiments.

“Quiet start” prevents spurious interface motion in HEDP/ICF simulations when materials are solid but have different pressures due to the initial conditions. For cylindrical problems, users will typically perform simulations that force pressure equilibrium between materials in the initial conditions to avoid spurious interface motion. However, LANL found that this practice was the source of long-standing discrepancies in shock speeds through foams between simulation and experiment. By implementing the new cylindrical quiet start method, LANL can use accurate initial conditions in the simulations. As a result, researchers are able to match shock speed and void behavior throughout MARBLE void collapse experiments to measure shock-bubble interactions in the HEDP regime for the first time. It is notable that by using University of Rochester’s Laboratory for Laser Energetics’ inline cross-beam energy transfer modeling, simulations were performed without any adjustable parameters. This is the first time such remarkable agreement between simulation and experiment has been demonstrated for cylindrical targets without using multipliers to improve agreement with experiment. (LA-UR-21-24580)

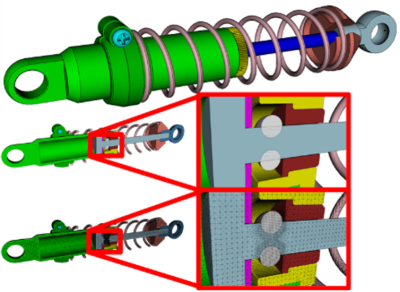

SNL’s Next-Generation Simulation Project aims to reduce turn-around-time and improve the credibility and accessibility needed for virtual design and qualification by enabling fast learning and decision cycles based on modeling and simulation.

Drastically reducing the time to complete a modernization program requires enabling fast learning and decision cycles based on modeling and simulation (ModSim). The Next Generation Simulation (NGS) project at Sandia has demonstrated the capability to dramatically reduce turn-around-time for design decision analyses. NGS was recently applied to the design of an existing actuator pulsed battery assembly (APBA) being considered in a new environment. Using Morph, NGS’s novel automatic meshing capability, Sandia researchers demonstrated the ability to refine the mesh automatically around sub-components tagged by an analyst within a complex assembly (see generic example in Figure 11). Reduced turn-around-time was achieved through Morph’s ability to generate tetrahedral meshes on CAD drawings, reducing and often eliminating the need for analysts to manually remove volume overlaps, gaps, and small features. This demonstration is the required first step in set-up time reduction for simulation-based design decisions. Further work is in progress to assess different designs in various environments for fast cycles of learning. (SAND2021-5252 O)

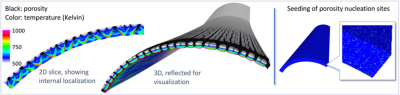

Fragmentation and material failure modeling for stockpile stewardship is accelerated 20x by the GPU port of LLNL ASC-PEM and ASC-IC program capabilities.

In scenarios such as explosive safety, there is significant interest in modeling material failure and fragmentation. In a collaborative effort across Physics and Engineering Models (PEM) and Integrated Codes (IC) activities within ASC, models have been developed to enhance physical fidelity of fragmentation simulations, and capabilities increased dramatically with the recent port of the implementations to GPU-based computing platforms. The simulations make use of a porosity-based constitutive model and a novel non-local approach to mitigate the mesh dependence seen in classical approaches. Both aspects of the new approach are computationally expensive and have been recently ported to GPUs, with a roughly 20X node-to-node speedup on the Sierra computing architecture compared to the CTS-1 architecture. The modeling approach introduces a length scale, and this length scale and the porosity nucleation behavior can be associated with material microstructure and thus with manufacturing variations. This work supports two FY21 ASC milestones related to GPU utilization. (LLNL-ABS-824662)

LLNL researchers analyze near-surface nuclear detonations to better predict potential damage.

An LDRD-funded research team at LLNL is taking a closer look at nuclear weapon blast effects close to the Earth’s surface.

Previous efforts to correlate data from events with low heights of burst revealed a need to improve the theoretical treatment of strong blast waves rebounding from hard surfaces. Investigators compared theory with numerical simulations of different nuclear yields, heights of burst, and ambient air densities. They focused on nuclear blasts in non-ideal environments, such as blasts over mountainous terrain, or in the presence of rain or snow. These types of environments change the blast wave in operationally significant ways.

Results of their high-fidelity blast simulations indicate that the shock wave produced by a nuclear detonation continues to follow a fundamental scaling law when reflected from a surface. These findings enable investigators to more accurately predict the damage a detonation will produce in a variety of situations, including urban environments. “Having the capability to accurately predict the damage of a high-yield device in a wide array of cases is of paramount interest to our national security,” said LLNL scientist Greg Spriggs. “This information enables us to pre-compute potential damage and guide emergency response personnel in the event that the United States is attacked or in case of a catastrophic accident.” This latest research grew out of decades of data collected during LLNL’s work scanning and analyzing films of old atmospheric tests. “The more we know about the effects of nuclear detonations in different environments, the better prepared we will be to respond,” Spriggs said.

More information about research related to near-surface nuclear detonations can be found on LLNL’s website. (LLNL-WEB-458451)

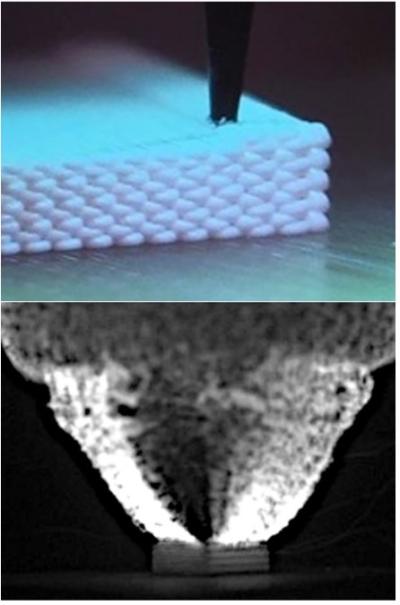

Intentional “Imperfections”: LANL researchers discover new capability for advanced manufacturing.

A long-standing challenge in explosives research is the development of a material that is “just right.” The material should not be too sensitive and potentially detonate unintentionally, but should also not be too insensitive and never detonate. Using advanced manufacturing techniques, researchers at LANL have developed a process to produce explosive materials with tailored sensitivity.

In trinitrotoluene or TNT, perhaps the most well-known example of an explosive, hot spots control the dynamic behavior of the material. These hot spots often form around defects, such as air bubbles or inclusions, during the manufacture of the explosive. The uneven flow into and around these imperfections results in points of intense heat that largely control the energy necessary to initiate detonation.

The key insight for the LANL team in tailoring the sensitivity of explosives was the application of advanced manufacturing techniques to intentionally introduce voids within a fabricated material. Advanced manufacturing techniques not only allow the material to be manipulated at the mesoscale, but also eliminate variables and unintentional inclusions. With the ability to control the placement of voids within a material, the team looks to control the release of energy through a sophisticated arrangement of hot spots. The team demonstrated that the structure introduced can be used to manipulate both the detonation direction and detonation timing in a manufactured sample.

The work of the team has broad impact in detonation physics research. Following the conclusion of their LDRD project, the team found applications within the NA-115 Advanced Manufacturing Development Program mission space.

Principal investigator Alexander Mueller describes: “This work ideally will make HE materials safer and make their energy delivery more precise. Due to a precise application of HE power, this work will have implications in what technologies these material can be applied to; worker safety in industry and military that handle and use these materials, and hopefully, when developed to a high level, a reduction of unintended casualties from conventional weapons. It will also allow for weapon designers to explore new concepts, due to both the new effects that can now be used to manipulate the dynamic behavior of the HE and the agility gained by reducing the design-manufacture-test cycle through the use of these techniques.” (LA-UR-21-23577)

Questions? Comments? Contact Us.

NA-114 Office Director: Thuc Hoang, 202-586-7050

- Integrated Codes: Jim Peltz, 202-586-7564

- Physics and Engineering Models/LDRD: Anthony Lewis, 202-287-6367

- Verification and Validation: David Etim, 202-586-8081

- Computational Systems and Software Environment: Si Hammond, 202-586-5748

- Facility Operations and User Support: K. Mike Lang, 301-903-0240

- Advanced Technology Development and Mitigation: Thuc Hoang