OFFICE OF ADVANCED SIMULATION AND COMPUTING AND INSTITUTIONAL R&D PROGRAMS (NA-114)

Quarterly Highlights | Volume 5, Issue 1 | January 2022

In This Issue

LLNL, LANL, and SNL support organization and DOE-wide booth at SC21 in St. Louis

LANL, SNL, and LLNL recipients of the R&D 100 Awards unveiled

Improved evaluations for Pu at LANL incorporate experimental, theoretical advances

SNL enables W80-4 normal thermal qualification simulations on Sierra

State-of-the-art LANL HPC simulations unravel mysteries of nuclear fission process dynamics

SNL prototypes Engineering Common Model Framework, applies it to the B83 system

SNL LDRD-supported 3D-imaging workflow aids medicine, electric cars, nuclear deterrence

ASC & LDRD Community—Upcoming Events (at time of publication)

- JOWOG34 Applied Computer Science Meeting hosted by LANL (multi-site SVTC with LANL, LLNL, SNL, HQ, and AWE); February 28 – March 4

- Virtual Stewardship Capability Delivery Schedule (SCDS) Full Summit; March 2-3

- Virtual Exascale Computing Project Independent Project Review; March 15-17

- Predictive Science Panel (PSP) Meeting at LANL; March 21-25

- NA-11 Budget Summit; week of March 28th

- ECP “All Hands” Meeting in Kansas City, MO; May 2-5

- Predictive Engineering Science Panel (PESP) Meeting at SNL-NM; April 11-14

- ASC Principal Investigators Meeting, Monterey Hyatt/Naval Postgraduate School in Monterey, CA; May 16-19

Questions? Comments? Contact Us.

Welcome to the first 2022 issue of the NA-114 newsletter - published quarterly to socialize the impactful work being performed by the NNSA laboratories and our other partners. This issue begins with a hearty “thank you” to the NNSA laboratory staff that supported the 2021 Supercomputing Conference (SC21) program and DOE/NNSA booth this past November, which were especially challenging due to the hybrid conference format and COVID-19 restrictions. Also highlighted in this issue are the Los Alamos National Laboratory (LANL), Sandia National Laboratories (SNL), and Lawrence Livermore National Laboratory (LLNL) recipients of the R&D 100 Awards unveiled this past year, given in recognition of exceptional new products or processes developed and introduced into the marketplace. Other highlights include:

- LANL’s improved evaluations for plutonium which incorporate recent experimental and theoretical advances.

- LLNL ASC Physics and Engineering Models (PEM) molecular dynamics simulations that enhance our understanding of insensitive high explosives modeling for stockpile performance and safety analysis.

- Sandia’s W80-4 normal thermal qualification simulations run on the ASC Sierra system.

- Sandia’s development of a data-driven model of porous metal failure to help designers better understand reliability of additively manufactured parts for stockpile stewardship.

- LANL’s progress using HPC simulations to unravel mysteries of nuclear fission process dynamics (one such simulation of a heavy nucleus (236U) on its way to fission is shown in the banner image above).

Please join me in thanking the tri-lab staff who worked tirelessly for SC21 and congratulating the dedicated professionals who delivered the achievements highlighted in this newsletter, in support of our national security mission.

Thuc Hoang

NA-114 Office Director

LLNL, LANL, and SNL staff supported the Supercomputing 2021 Conference program and DOE-wide booth in St. Louis this past November.

The ASC HQ program office extends its thanks to the primary organizers of the SC21 program, committees, and DOE-wide booth.

The ASC HQ program office thanks LLNL’s Bronis de Supinski, General Chair for SC21, and Lori Diachin, Virtual Logistics Chair for the SC21 Virtual Program, for their lead roles in this year’s hybrid conference (with proceedings delivered both in person and virtually), as well as LANL’s John Patchett, Dave Modl, and Ethan Stam for their impressive booth organization efforts. Other members of the SC21 Executive Committee conducted extensive planning work that contributed immensely to the success of the conference, including Michele Bianchini-Gunn (LLNL), Executive Assistant; Candy Culhane (LANL), Infrastructure Chair; Todd Gamblin (LLNL), Tech Program Vice Chair; Lance Hutchinson (SNL), SCinet Chair; and Jay Lofstead (SNL), Students@SC Chair. The full list of SC21 planning committee members is available online here.

The DOE-wide booth hosted 12 live hybrid featured talks and 13 technical demonstrations broadcast in the booth and via Webex. John Patchett and Ethan Stam managed all of these hybrid demonstrations on the show floor. Dave Modl and Dave Rich (LANL) served on the Booth Committee. Dave Modl also served as the live Booth Manager during the conference. Deanna Willis (LLNL) served on the SC21 Program Committee and helped organize all of the presenters and their time slots. Meg Epperly (LLNL), Ethan Stam, and Nicole Lucero (SNL) served on the Communications Committee. This team also assisted in developing the https://scdoe.info website, planned and managed social media during the show, and created in-booth digital signage. (DOE/NA-0127)

- Gregory Becker (LLNL), Virtual Logistics Tutorials Content Liaison

- Janine Bennett (SNL), Finance Liaison for Tech Program

- Adam Bertsch (LLNL), SCinet Network Security Team Co-Chair

- Stephanie Brink (LLNL), Student Cluster Competition Vice Chair

- Jill Dougherty (LLNL), Wayfinding Chair

- Maya Gokhale (LLNL), Tech Papers Architecture & Networks Area Chair

- Elsa Gonsiorowski (LLNL), Tech Papers System Software Area Vice Chair

- Annette Kitajima (SNL), SCinet Fiber Team Co-Chair

- Julie Locke (LANL), SCinet Fiber Team Co-Chair

- Clea Marples (LLNL), Virtual Logistics Operations Lead

- Kathryn Mohror (LLNL), Special Events Chair

- Scott Pakin (LANL), Student Networking and Mentorship Chair

- Wendy Poole (LANL), Families@SC Chair

- Heather Quinn (LANL), Inclusivity Deputy Chair/Virtual Logistics, Inclusivity Liaison

- Elaine Raybourn (SNL), Scientific Visualization Posters Chair

- Kathleen Shoga (LLNL), Student Cluster Competition Chair

- Jeremy Thomas (LLNL), Reporter

- Jamie Van Randwyk (LLNL), Space Chair

- SCinet Network Security Team Members: Kristen Beneduce (SNL), Jason Hudson (LLNL), and Thomas Kroeger (SNL)

- Melinda DeHerrera (LANL), SCinet Fiber Team Member

- Rigoberto Moreno Delgado (LLNL), Student Cluster Competition Committee

- Stephen Herbein (formerly of LLNL), Mobile Application Chair and the Students@SC Alumni Event Chair

LANL, SNL, and LLNL recipients of the R&D 100 Awards unveiled in the fall of 2021.

ASC and LDRD-funded technologies received R&D 100 Awards, given in recognition of exceptional new products or processes developed and introduced into the marketplace in the previous year.

The R&D 100 Awards are selected by an independent panel of judges based on the technical significance, uniqueness, and usefulness of projects and technologies from across industry, government, and academia. These awards have long been a benchmark of excellence for industry sectors as diverse as telecommunications, high-energy physics, software, manufacturing, and biotechnology.

LLNL ASC program researchers garnered an award for their Flux next-generation workload management software. Developed by LLNL computer scientists in collaboration with the University of Tennessee, Knoxville for the ASC program stockpile stewardship mission needs, Flux is a next-generation workload management software framework for supercomputing, high-performance computing (HPC) clusters, cloud servers, and laptops. Flux maximizes scientific throughput by assigning the scientific work requested by HPC users – also known as “jobs” or “workloads” – to available resources that complete the work, a method called scheduling. Using its highly scalable breakthrough approaches of fully hierarchical scheduling and graph-based resource modeling, Flux manages a massive number of processors, memory, graphics processing units (GPUs) and other computing system resources – a key requirement for exascale computing and beyond. Flux has provided innovative solutions for modern workflows for many scientific and engineering disciplines including stockpile stewardship activities, COVID-19 modeling, cancer research and drug design, engineering and design optimization, and large artificial intelligence (AI) workflows. Workload management software such as Flux is critical for HPC users because it enables efficient execution of user-level applications while simultaneously providing the HPC facility with tools to maximize overall resource utilization.

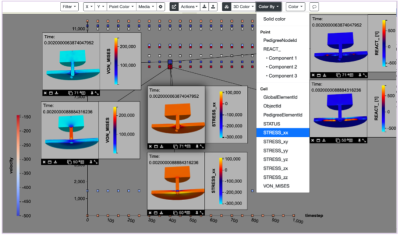

SNL’s ASC-funded Slycat won an R&D 100 Award for its support of ensemble simulation analysis. Slycat is a web-based data analysis and visualization platform that answers critical questions by enabling the analysis of massive, multidimensional, remote data from numerous simulations in a collaborative platform supporting the stockpile stewardship mission, specifically weapon design and qualification. Designers and analysts are now able to evaluate results from a series of related simulation runs using sensitivity analysis, uncertainty quantification, or parameter study computations and visualizations (see example in Figure 1). By looking at groups of runs, higher level patterns can be seen despite variations in the individual runs, enabling weapon analysts and designers to gain insights rapidly on how their choices impact performance. Weapon analysts report that “Slycat’s type of access to model results is unprecedented and allows designers a much more intuitive way to understand complex results as well as find solutions to design problems.” Created at Sandia, Slycat is currently in operation nationally. SNL’s R&D 100 winning entry video is available on YouTube here.

LANL received eight R&D 100 Awards in 2021, three of which were funded by LDRD. Among the three, LANL won an award for its SmartTensors AI Platform, in which software uses unsupervised machine learning to sift through massive datasets and identify hidden trends, mechanisms, signatures, and features buried in large high-dimensional data tensors (multi-dimensional arrays). SmartTensors AI Platform also won the “Bronze Special Recognition Award for Market Disruptor – Services” category, which highlights any service from any category as one that forever changed the R&D industry or a particular vertical area within the industry. LANL also won an award for the CICE Consortium, supporting open-source software providing extensive, accurate sea ice modeling across scales. And finally, LANL won an award for its Earth’s-Field Resonance Detection and Evaluation Device (ERDE), a portable nuclear magnetic resonance (NMR) spectrometer that employs Earth’s magnetic field and can be used anywhere. ERDE provides rapid, accurate, and safe identification of chemicals. Demonstrated on numerous materials and smaller than a microwave oven, ERDE offers higher resolution signatures than conventional superconducting NMR spectroscopy. To read more about LANL’s other 2021 R&D 100 Award winning technologies, see LANL’s November 9th news release. Please also visit R&D World for the complete list of 2021 R&D 100 Award winners. (DOE/NA-0127)

Improved evaluations for plutonium at LANL incorporate new experimental, theoretical advances.

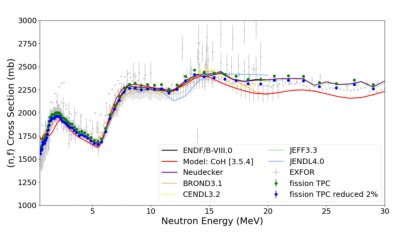

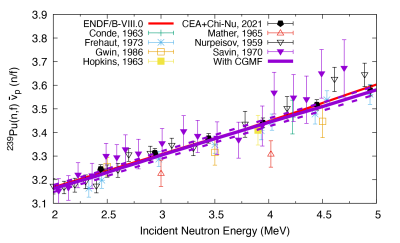

Accurate nuclear data on neutron-induced reactions of 239Pu are crucial to the success of LANL’s core mission as well as many endeavors in nuclear energy, global security, non-proliferation, criticality safety, and more broadly in nuclear defense programs. LANL researchers’ understanding of these important reactions keeps improving through dedicated experimental and theoretical research and development (R&D) efforts. Among them, the fissionTPC and Chi-Nu projects, supported through the NNSA Office of Experimental Sciences (NA-113) for the last decade, have recently produced the most accurate data to date on fission cross sections and the prompt fission neutron spectrum. These data are now being incorporated into the nuclear data evaluation process for the next U.S. library, Evaluated Nuclear Data File (ENDF)/B-VIII.1. The neutron-induced capture cross section of 239Pu has also been measured recently at the Detector for Advanced Neutron Capture Experiments (DANCE) at the Los Alamos Neutron Science Center (LANSCE) up to ~1 MeV.

On the theory side, the Cascading Gamma-ray Multiplicity Fission (CGMF) event generator, a LANL-developed code, has been used for the first time in a nuclear data evaluation to compute the average prompt fission neutron multiplicity as a function of incident neutron energy. By varying model parameters within reason, LANL was able to reproduce the ENDF/B-VIII.0 evaluation, which was based solely on experimental data. This brings the added advantage of computing other correlated fission data consistently, e.g., prompt fission gamma rays. Other theoretical advances, implemented in the LANL coupled-channels and Hauser-Feshbach (CoH) nuclear reaction cross section code, include the M1 enhancement in the gamma ray strength function, the Engelbrecht-Weidenmüller transformation applied to the calculation of the inelastic channel, and the implementation of a new collective enhancement factor to allow the simultaneous description of (n,f) and (n,2n) cross sections.

Both experimental and theoretical advances are being incorporated into a new ENDF-formatted data file to be proposed for adoption in the next release (VIII.1) of the U.S. evaluated nuclear data library ENDF/B. An initial validation phase of this new file shows very good agreement with a limited set of critical assembly keff simulations, reaction rates, and Pu pulsed-sphere calculations. More complex validation simulations will be performed soon against unclassified and classified benchmarks. (LA-UR-21-32010)

LLNL ASC Physics and Engineering Models molecular dynamics simulations enhance our understanding of insensitive high explosives modeling for Stockpile Stewardship Program performance and safety analysis.

High-rate strength behavior plays an important role in the shock initiation of high explosives, with plastic deformation serving to localize heat into hot spots and as a mechanochemical means to enhance reactivity. Recent all-atom molecular dynamics (MD) simulations performed at LLNL predict that detonation-like 30 gigaPascal (GPa) shocks produce highly reactive nanoscale shear bands in the insensitive high explosive triaminotrinitrobenzene (TATB) [1], but the thresholds leading to this response are poorly understood. Including this missing physics holds promise to improve the generality of continuum models of TATB-based explosives used in performance and safety analyses.

Large-scale all-atom MD simulations of axial compression were used to predict the strength response of TATB crystal as a function of pressure up to steady detonation values and for a range of crystal orientations to survey the level of anisotropy. Two deformation mechanisms are found to dominate the fine-scale response at all pressures: (i) layer twinning/buckling, and (ii) nanoscale shear banding (see Figure 3). Deformations of molecular shape are postulated to enhance chemical reactivity [2] and are measured in MD simulations using intramolecular strain energy, Uintra. Substantial molecular deformations are generated for all crystal orientations - even those that do not exhibit shear banding - but these conditions are only reached for pressures greater than 10 GPa. This may explain unusual changes in TATB reactivity with increasing shock pressure. Despite this fine-scale complexity, the global response is comparatively straightforward. Significant strain softening follows the onset of yielding, with the flow stress settling on a steady value for most of the orientations considered. The ratio of flow stress, Y, to effective shear modulus, Geff, is essentially constant in pressure (Figure 4). This suggests a plausible route to simplify macroscale strength models, which when coupled to measured intramolecular strain energy distributions, provides grounds for parameterizing a “strength-aware” chemical kinetics model for TATB-based explosives. The molecular dynamics results will be incorporated into macroscale reactive flow models of high explosive performance and safety developed by Physics and Engineering Models (PEM). (LLNL-ABS-831097)

[1] M. P. Kroonblawd and L. E. Fried, “High explosive ignition through chemically activated nanoscale shear bands,” Phys. Rev. Lett. 124, 206002 (2020).

[2] B. W. Hamilton, M. P. Kroonblawd, C. Li, and A. Strachan, “A hotspot’s better half: non-equilibrium intra-molecular strain in shock physics,” J. Phys. Chem. Lett. 12, 2756 (2021).

SNL enables W80-4 normal thermal qualification simulations on the Sierra system.

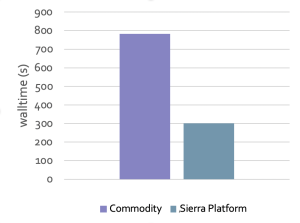

The time it takes for a nuclear weapons analyst to execute computational simulations is an important factor in determining how effectively they can support qualification testing campaigns. ASC researchers at Sandia have delivered to W80-4 analysts the capability to run normal thermal qualification simulations on the Sierra HPC system, which contains GPUs and is the largest computing resource in the NNSA complex (sited at LLNL). GPUs are notoriously difficult to program, and delivering this capability involved reducing the memory required for the thermal radiation physics by over 20% and porting physics algorithms to run on the GPUs. Simulation performance is improved over commodity systems when compared on a node-to-node basis, as shown in Figure 5 (on left). Enabling the Sierra HPC system as a resource for analysts will allow them to move their work off much smaller commodity HPC systems, dramatically reducing the time it takes to execute their qualification simulations. (SAND2021-15083 O)

Sandia has developed a data-driven model of porous metal failure to help designers understand reliability of additively manufactured parts for stockpile stewardship.

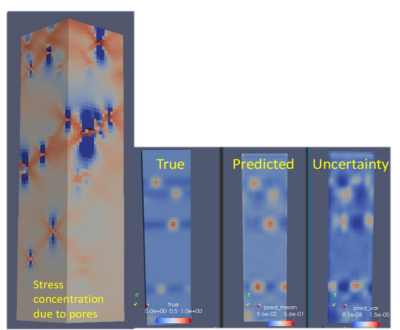

Additive Manufacturing (AM) is an attractive process for producing small batches of parts with complex geometries that are highly optimized to their tasks, which is ideal for stockpile stewardship applications. However, AM parts suffer from significant porosity due to the way they are built, which can lead to part failure. Predicting the reliability of these parts using machine learning approaches applied to scans of the porosity is promising but also challenging. Because failure is a rare event for a well-designed part, performance data generally contains only a few failures, which confounds simplistic machine learning strategies. In response, SNL staff and University of Michigan researchers have developed a machine-learning approach that was more than 85% accurate in predicting ductile failure locations based on scans of the porosity (an example is shown in Figure 6, on the left). Work is ongoing to validate and interpret the uncertainty estimates and generalize the modeling technique beyond porosity to related material failure processes, such as those due to hard inclusions, grain boundaries, and other defects. As the method matures it can be coupled with topology optimization to produce robust, high performance AM parts. (SAND2021-15730 O)
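To illustrate why rare failures confound simplistic machine learning strategies, the short Python sketch below scores a trivial "never fails" predictor on a made-up, highly imbalanced label set. The data, the 2% failure rate, and the inverse-frequency class weighting are illustrative assumptions only; they are not the SNL and University of Michigan method, which this article does not describe in detail.

```python
def accuracy(y_true, y_pred):
    """Fraction of all samples labeled correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred):
    """Fraction of true failures (label 1) that were predicted as failures."""
    positives = sum(y_true)
    if positives == 0:
        return 0.0
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    return hits / positives

def balanced_class_weights(y_true):
    """Weight each class inversely to its frequency: n / (n_classes * count)."""
    n = len(y_true)
    counts = {c: y_true.count(c) for c in (0, 1)}
    return {c: n / (2 * k) for c, k in counts.items() if k > 0}

# Hypothetical data: 1000 candidate sites, only 20 of which fail.
labels = [0] * 980 + [1] * 20
never_fails = [0] * len(labels)  # a predictor that ignores its input

print(accuracy(labels, never_fails))   # 0.98, looks excellent
print(recall(labels, never_fails))     # 0.0, finds no failures at all
print(balanced_class_weights(labels))  # failures get a much larger weight
```

A predictor can therefore post high accuracy while missing every failure, which is why metrics such as recall, or training schemes that up-weight the rare class, are commonly used for this kind of problem.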

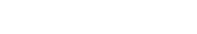

State-of-the-art HPC simulations at LANL unravel mysteries of nuclear fission process dynamics.

HPC simulations help inform production of nuclear data libraries.

Uncertainties in evaluated nuclear fission data are too large for some applications, and an accurate quantitative description of this complex physics process remains elusive to this day. Large simulations of the motion of the protons and neutrons inside the fissioning nucleus can shed some light on various correlated fission data with an unprecedented level of detail. Of particular interest are the characteristics and mechanisms of emission of prompt fission neutrons, such as their energy spectrum, which was recently studied at the Chi-Nu experiment at LANSCE. Another important correlated data quantity is the total kinetic energy carried away by the separated fragments.

A model consisting of a set of 320,000 coupled partial differential equations is used to follow the evolution of a fissioning uranium nucleus, describing the time-dependent interactions among the 236 nucleons forming the original nucleus. This simulation was performed on 2,048 nodes of LLNL’s Sierra supercomputer using the code package LISE (solvers for static and time-dependent superfluid local density approximation equations in 3D), requiring 10 hours of wall-time and being performed almost entirely on GPUs. This software was a product of a collaboration between LANL, University of Washington, and Pacific Northwest National Laboratory (PNNL), and demonstrates how new HPC architectures can be effectively used to solve previously intractable problems.

While the results of this sort of large-scale simulation cannot be used directly in creating nuclear data libraries, such as ENDF/B-VIII.0, they can inform and validate important physics assumptions made in the more phenomenological physics models used to produce such data. For instance, the rigorous and quantitative study of scission neutrons (neutrons emitted during or close to the nucleus neck breakup) and the conditions of creation of the two nascent fragments in terms of excitation energy and angular momentum significantly influence the calculation of the average number and energy spectra of prompt neutrons and photons emitted in the process.

The ability to perform calculations such as these enables the connection of fundamental microscopic physics models to quantities used by application codes, which is seen as a grand challenge in nuclear reaction theory. To fully make this connection however, even larger computations will be required, pushing and testing the limits of current HPC machines. Increasing the spatial extent of the simulation would allow the calculation to assess the later stages of the fission process when the two fragments are fully separated and accelerated, an initial condition for subsequent nuclear reaction codes developed at LANL. (LA-UR-21-30396)

LLNL ASC Physics and Engineering Models team develops and delivers a new baseline equation of state, plus model variations, for tantalum.

The LLNL PEM team has implemented new capabilities into LLNL’s state-of-the-art multiphase equation of state (EOS) generation code, MEOS, that enable: 1) greatly improved efficiency in EOS generation, so that new data can be incorporated at an accelerated pace, and 2) the ability to rapidly produce model variations for EOS-based uncertainty quantification studies that explore the residual uncertainty. MEOS has been applied to provide new tantalum EOS models supporting high-pressure experiments.

Elemental tantalum (Ta) is widely used within the NNSA complex as a prototypical dense, metallic material in dynamic high-pressure experiments. This is partly because its phase diagram is believed to be quite simple: Only a single solid phase (body-centered cubic) of tantalum is known to be stable even up to many millions of times atmospheric pressure. To both design and interpret these high-pressure experiments, an EOS (which provides a macroscopic description of the interdependence between pressure, internal energy, density, and temperature) must be assumed as an input. Prior to the new PEM improvements, the LLNL-based EOS of choice for tantalum was the LEOS-734 model. Like all other such models, it was constrained by fitting to experimental data and to ab initio theoretical predictions where such data was lacking.

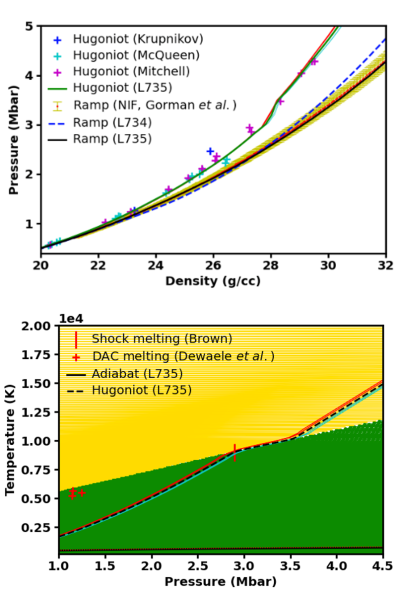

MEOS played a critical role in building a new EOS for tantalum, LEOS-735, that incorporates data from very recent ramp-compression experiments performed at the National Ignition Facility (NIF) and new predictions from first-principles theory. The NIF team produced for the first time a pressure versus density compression curve for this metal up to over 20 million times atmospheric pressure (20 Mbar), constraining the compressibility of the solid phases. LLNL’s density functional theory (DFT)-based molecular dynamics simulation constrained the entropy of the liquid phase. LEOS-735 is now available for use by LLNL experimental designers and analysts and was developed in only a few months: a rapidly accelerated timeframe that would not have been possible without the new capabilities in MEOS.

In addition to creating a new baseline EOS model, LLNL has used the new capabilities within MEOS to produce an expanded set of Ta EOS models: LEOS-735 + variations, which account for the remaining uncertainty in the thermal components of the EOS. Each of the variations to LLNL’s baseline model fits the pressure vs. density shock Hugoniot data and ramp compression data equally well (see Figure 8, top plot), but they differ from each other when plotted in pressure versus temperature format (see Figure 8, bottom plot); this reflects the fact that temperature (T) is not fully constrained in most dynamic experiments. The enhancements to the MEOS code which enabled the rapid construction of model variations are a direct result of recent investments in productivity and workflow. With the delivery of the full set of EOS models, LEOS-735 and LEOS-735 + variations, LLNL arms the designer/analyst with the best estimate of the tantalum EOS and a means to assess the impact of uncertainties on the predictions of key dynamic high-pressure experiments. (LLNL-ABS-831059)

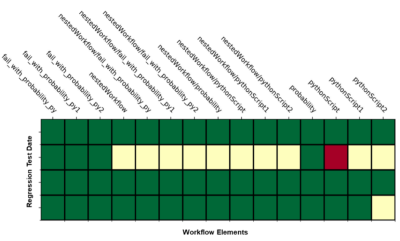

Sandia has prototyped the Engineering Common Model Framework and applied it to the B83 system.

Readily available verified, validated, and reproducible computational engineering models for stockpile systems are an important part of their effectiveness. With such models, simulations can be run as soon as the need arises, enabling analysts to address issues and inform next steps before scheduling expensive and time-consuming experiments. However, sustainment of computational engineering models has been challenged by the complexity of engineering workflows and the continuous evolution and improvement of computational tools and material databases. ASC researchers at Sandia recently created the first repository for the “Engineering Common Model Framework” (ECMF) and are using it to store models for the B83 system. Sandia’s Next-Generation Workflow system was used to convert B83 thermal models into automated graphical workflows that are documented and reproducible. Automated, periodic testing monitors the health of each B83 model. Even as computing resources, analysis codes, and material databases are updated, collocation of credibility evidence with each model allows analysts to assess a model’s readiness for application to stockpile needs. The ECMF also ensures that current and future analysts operate with a common, established technical basis and reduces the amount of time it takes to onboard new weapon analysts. (SAND2021-12799 O)

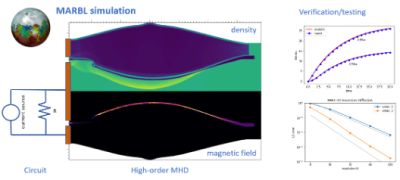

LLNL ASC Multi-physics on Advanced Platforms Project team adds high-order magnetohydrodynamics method and a circuit solver to support simulation needs for experiments.

Accurate high-performance simulation capabilities for magnetized plasmas coupled to circuits are needed for applications in designing pulsed-power and magnetic inertial confinement fusion experiments on platforms like the Sandia Z machine. In addition, high-explosive pulsed power platforms are used in material science experiments at LLNL [A. White et al., “Explosive pulsed power experimental capability at LLNL,” 2012 Int. Conf. on Megagauss Magn. Field Gen.]. LLNL’s next-generation, multi-physics simulation code MARBL has recently gained a resistive magnetohydrodynamics modeling capability, coupling a 3D/2D (in-plane, out-of-plane axisymmetric) high-order arbitrary Lagrangian-Eulerian (ALE) finite-element magnetohydrodynamic (MHD) and multi-port circuit solver to its physics module suite by extending numerical methods [noted in R. Rieben, et al., J. Comp. Phys. 1, 226 (2006); D. White, IEEE Trans. Magn. 41, 10 (2009)]. This new physics module allows MARBL to simulate a wide range of magnetized experiments. (LLNL-ABS-831045)

Sandia LDRD-supported 3D-imaging workflow has benefits for medicine, electric cars, and nuclear deterrence.

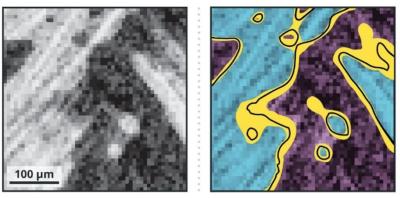

Sandia researchers created a method of processing 3D images for computer simulations that could have beneficial implications for several industries, including health care, manufacturing, and electric vehicles. At Sandia, the method could prove vital in certifying the credibility of HPC simulations used in determining the effectiveness of various materials for weapons programs and other efforts, said Scott A. Roberts, Sandia’s Principal Investigator on the project. Sandia can also use the new 3D-imaging workflow to test and optimize batteries used for large-scale energy storage and in vehicles. “It’s really consistent with Sandia’s mission to do credible, high-consequence computer simulation,” he said. “We don’t want to just give you an answer and say, ‘trust us.’ We’re going to say, ‘here’s our answer and here’s how confident we are in that answer,’ so that you can make informed decisions.”

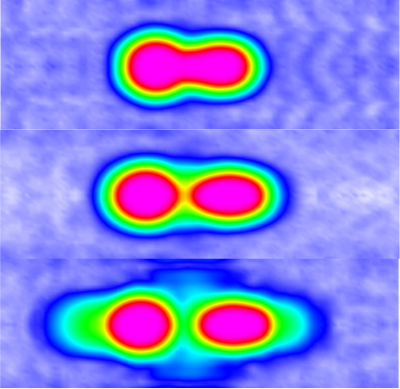

The researchers shared the new workflow, dubbed by the team as EQUIPS (Efficient Quantification of Uncertainty in Image-based Physics Simulation), in a paper published in the journal Nature Communications. “This workflow leads to more reliable results by exploring the effect that ambiguous object boundaries in a scanned image have in simulations,” said Michael Krygier, a Sandia postdoctoral appointee and lead author on the paper. “Instead of using one interpretation of that boundary, we’re suggesting you need to perform simulations using different interpretations of the boundary to reach a more informed decision.” EQUIPS can use machine learning to quantify the uncertainty in how an image is drawn for 3D computer simulations. By giving a range of uncertainty, the workflow allows decision-makers to consider best- and worst-case outcomes, Roberts said.

Think of a doctor examining a computerized tomography (CT) scan to create a cancer treatment plan. That scan can be rendered into a 3D image, which can then be used in a computer simulation to create a radiation dose that will efficiently treat a tumor without unnecessarily damaging surrounding tissue. Normally, the simulation would produce one result because the 3D image was rendered once, said Carianne Martinez, a Sandia computer scientist. But drawing object boundaries in a scan can be difficult, and there is more than one sensible way to do so, she said. “CT scans aren’t perfect images. It can be hard to see boundaries in some of these images.” One such example is shown in Figure 11 (on right).

Humans and machines will draw different but reasonable interpretations of the tumor’s size and shape from those blurry images, Krygier said. Using the EQUIPS workflow, which can use machine learning to automate the drawing process, the 3D image is rendered into many viable variations showing size and location of a potential tumor. Those different renderings will produce a range of different simulation outcomes, Martinez said. Instead of one answer, the doctor will have a range of prognoses to consider that can affect risk assessments and treatment decisions, be they chemotherapy or surgery. Find more information about the 3D-imaging workflow on the Sandia website. The research, funded by Sandia’s LDRD program, was conducted with partners at Indiana-based Purdue University, a member of the Sandia Academic Alliance Program. Researchers have made the source code and an EQUIPS workflow example available online. (SAND2021-10839E)
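The idea of running a simulation on several plausible interpretations of an ambiguous boundary can be sketched in a few lines of Python. This is a toy illustration and not the EQUIPS code: the synthetic blurry disk, the use of different intensity thresholds as a stand-in for different segmentations, and the "simulation" that simply counts pixels are all assumptions made for the example.

```python
import math

def blurry_disk(size=64, radius=12.0, blur=3.0):
    """Synthetic grayscale image of a disk with a soft edge (hypothetical data)."""
    c = (size - 1) / 2
    img = []
    for y in range(size):
        row = []
        for x in range(size):
            r = math.hypot(x - c, y - c)
            # Logistic falloff: near 1 inside, near 0 outside, ambiguous at the edge.
            row.append(1.0 / (1.0 + math.exp((r - radius) / blur)))
        img.append(row)
    return img

def segment(img, threshold):
    """One interpretation of the boundary: pixels at or above the threshold are 'object'."""
    return [[1 if v >= threshold else 0 for v in row] for row in img]

def simulate(mask):
    """Stand-in for a physics simulation: here, just the object area in pixels."""
    return sum(sum(row) for row in mask)

def outcome_range(img, thresholds):
    """Run the stand-in simulation once per interpretation; report the spread."""
    results = [simulate(segment(img, t)) for t in thresholds]
    return min(results), max(results), results

low, high, all_results = outcome_range(blurry_disk(), [0.3, 0.5, 0.7])
print(f"object area ranges from {low} to {high} pixels: {all_results}")
```

Reporting the low and high values alongside a nominal one is what lets a decision-maker weigh best- and worst-case outcomes instead of a single answer.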

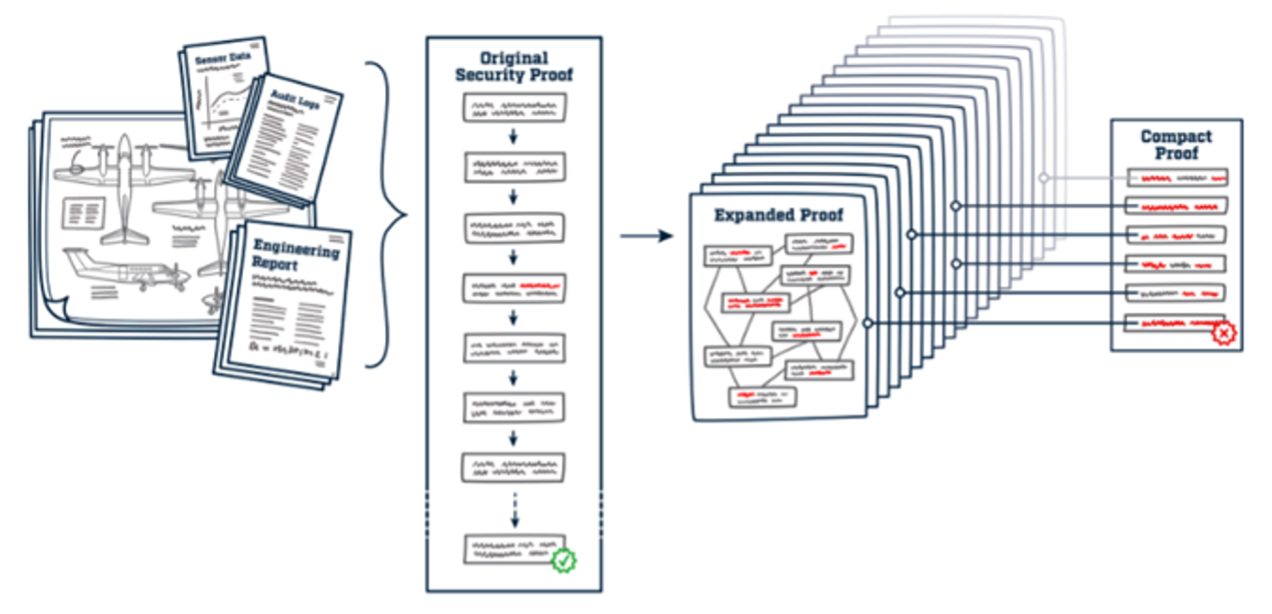

LANL LDRD researchers discover relatively new cryptographic protocol proving the authenticity of digital information without revealing the information itself.

The easiest way to cheat on your math homework is to find the answers somewhere, such as the internet or an answer key. The best way for a teacher to prevent this kind of cheating is to issue the widely dreaded requirement to “show your work.” Showing the work usually reveals whether that work is legitimate and original, but it’s a burden: the teacher must now review every student’s every line of work. This may be a burden some teachers are willing to accept, but what if, instead of the answer to a math problem, one were trying to prove the veracity of satellite imagery (which could have been intercepted and faked)? Or the proper manufacture and assembly of parts for a nuclear weapon (which could have been subtly sabotaged)? Or the output from a computer network that controls critical infrastructure (which could have been hacked)? Or the accuracy of scientific experiments, data, and analysis (which could have been biased)?

In such cases, evidence of tampering, or some other manner of inauthenticity, may be expertly hidden in one small corner of the greater whole. And even if a “show your work” approach were sufficient in theory, finding one incongruous detail in the endless terabytes needed to prove the validity of such complex information would be a nearly hopeless task. Moreover, it would require actually showing—sharing, transmitting, publishing—“your work,” thus putting sensitive information at even greater risk.

The solution to this rather esoteric challenge is something called a “zero-knowledge proof” (ZKP). It’s a technique that cryptographically encodes a small, robust, random sample of what “showing your work” would entail—evidence of a spot check, of sorts, but without including any of the actual work—together with a clever mathematical manipulation designed to ensure that any data tampering, no matter how small or well hidden, is overwhelmingly likely to get picked up. “The math of it has already been worked out,” says Michael Dixon, a scientist in LANL’s Advanced Research in Cyber Systems group.
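To make "proving something without revealing it" concrete, here is a much simpler classic than the technique discussed in this article: a Schnorr-style proof that the prover knows a secret exponent, made non-interactive by hashing the commitment to obtain the challenge. It is not a zk-SNARK and is not the LANL software. The parameters `P` and `G` and the function names are toy choices assumed for illustration and are not vetted for real use.

```python
import hashlib
import secrets

# Toy public parameters (assumptions for illustration only).
P = 2**127 - 1   # a Mersenne prime used as the modulus
G = 3            # base element
ORDER = P - 1    # exponents are reduced modulo P - 1 (Fermat's little theorem)


def _challenge(public_key, commitment):
    """Derive the challenge by hashing the public values (no verifier round trip)."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER


def prove(secret):
    """Return (public_key, proof) showing knowledge of `secret` without sending it."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(ORDER)          # fresh randomness masks the secret
    commitment = pow(G, nonce, P)
    c = _challenge(public_key, commitment)
    response = (nonce + c * secret) % ORDER
    return public_key, (commitment, response)


def verify(public_key, proof):
    """Check G^response == commitment * public_key^c (mod P)."""
    commitment, response = proof
    c = _challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


if __name__ == "__main__":
    public_key, proof = prove(secret=123456789)
    print("proof accepted:", verify(public_key, proof))
```

The verifier only ever sees the public key, the commitment, and the response; the secret itself is never transmitted.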

A proof could take the form of an entire “show your work” explanation. But this extra information is undesirable. It requires the transmission and examination of large amounts of data or back-and-forth interaction.

A better solution is based on an important result in computational complexity theory known as the PCP (probabilistically checkable proof) theorem. The theorem essentially states that if you have a mathematical proof of a particular length, it is possible to reconfigure that proof into a longer one that can be verified, with great accuracy, using only a random sampling of a very small subset of that longer-form proof. That’s the spot check. The resulting cryptographic proof is something called a zk-SNARK: a zero-knowledge, succinct, non-interactive argument of knowledge. Here, the terms “succinct” and “non-interactive” eliminate the cumbersome varieties of ZKP.
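The arithmetic behind the spot check can be sketched as follows. The sketch assumes the hard part is already done, namely that the proof has been re-encoded so that any tampering makes a known fraction of positions inconsistent; it only shows how quickly random sampling then catches the problem. The function names and the `bad_fraction` parameter are hypothetical and chosen for this example.

```python
import random


def detection_probability(bad_fraction, num_checks):
    """Chance that at least one of `num_checks` independent random samples hits a bad spot."""
    return 1.0 - (1.0 - bad_fraction) ** num_checks


def simulate_detection(bad_fraction, num_checks, trials=2000, seed=0):
    """Estimate the same probability empirically by sampling a synthetic proof."""
    rng = random.Random(seed)
    proof_length = 1000
    num_bad = int(bad_fraction * proof_length)
    # True marks an inconsistent position in the (already re-encoded) proof.
    proof = [True] * num_bad + [False] * (proof_length - num_bad)
    caught = 0
    for _ in range(trials):
        if any(proof[rng.randrange(proof_length)] for _ in range(num_checks)):
            caught += 1
    return caught / trials


if __name__ == "__main__":
    for checks in (10, 50, 100):
        print(checks, "checks:", round(detection_probability(0.1, checks), 6))
```

Under these assumptions the miss probability shrinks exponentially with the number of samples, which is why a small random subset can stand in for reading the whole proof.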

With zk-SNARKs, a complicated data product can be passed along between numerous parties, even explicitly distrusted parties, before it reaches its final recipient, and yet that recipient can be assured of the data’s veracity in the end. Dixon refers to this as a “chain of provenance”: at each stage of working with a data product, a new zk-SNARK combines the verification associated with the current stage with the previous zk-SNARK, which verified all prior stages, confirming that the information was processed properly by all the appropriate computational procedures and not touched by anything else.
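The chaining structure, in which each stage folds the previous stage's certificate into its own, can be illustrated with an ordinary hash chain. This is a deliberately weaker stand-in: unlike a zk-SNARK, a hash chain proves nothing about how the data were computed, and the verifier here must see every stage's output to re-derive the final digest. The stage names and helper functions are hypothetical.

```python
import hashlib
import json


def stage_digest(previous_digest, stage_name, output_data):
    """Bind this stage's output to everything that came before it."""
    record = json.dumps(
        {"prev": previous_digest, "stage": stage_name, "output": output_data},
        sort_keys=True,
    )
    return hashlib.sha256(record.encode()).hexdigest()


def build_chain(stages):
    """Return one digest per stage; each digest covers all earlier stages."""
    digest = "genesis"  # arbitrary starting value assumed for this sketch
    chain = []
    for name, output in stages:
        digest = stage_digest(digest, name, output)
        chain.append(digest)
    return chain


def verify_chain(stages, final_digest):
    """Recompute the chain and compare only the last link."""
    return build_chain(stages)[-1] == final_digest


if __name__ == "__main__":
    pipeline = [("capture", "raw image"), ("calibrate", "calibrated image"),
                ("analyze", "analysis result")]
    final = build_chain(pipeline)[-1]
    print("untouched:", verify_chain(pipeline, final))
    pipeline[1] = ("calibrate", "altered image")
    print("after tampering:", verify_chain(pipeline, final))
```

A change at any earlier stage alters the final digest, so the recipient needs to check only the last link to learn that something upstream was modified.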

From a national security perspective, ZKPs have immediate value. The U.S. military, for example, needs to verify that the complex defense systems it purchases from large numbers of private-sector contractors and subcontractors have been built precisely according to approved specifications. They must have properly sourced parts and be correctly assembled, carefully transported, and faithfully deployed—all succinctly and convincingly verified at every step of the way without having to take the whole thing apart or, in the case of nuclear weapons, perform a weapons test, which is prohibited by international treaty. Similarly, complex civilian products involving foreign or domestic private-sector subcontractors can be reliably tracked and verified by sharing only zk-SNARKs and thereby not sharing any proprietary information.

Dixon and his student, Zachary DeStefano, who is entering a doctoral program in computer security, set out to secure output from machine-learning systems called neural networks. Neural networks are complex algorithms that use many nodes, each with parameters that must be learned through experience, to simulate processing by neurons. Dixon and DeStefano’s solution, unsurprisingly, involves ZKPs.

“The most powerful neural-network models are based on datasets much larger than what any one organization can house or efficiently collect,” says DeStefano. “We live in an era of cloud computing, and neural-network computations are often subcontracted out. To validate critical results—or just to make sure you got what you paid for—you need a succinct, non-interactive way to prove that the correct neural network, set up exactly as you specified, processed the inputs you provided and no others.”

Last year, Dixon and DeStefano wrote a software program to do exactly that. It produces a zk-SNARK to validate both the inputs and the specific network execution for a system trained to recognize handwritten numbers. Without supplying a massively cumbersome log of all the scribbles in the dataset, of every single value of every variable the neural network adjusted, of what outputs the countless iterations produced, or of how the network learned to improve its accuracy, the proper processing was nonetheless successfully confirmed.

But with neural networks, there’s another concern: their training. A ZKP is needed to account for the authenticity of the training set originally supplied to the neural network, which the customer often has no part in. Dixon and DeStefano are working on that now.

“We’re working to help a distributed system for crowd-sourced training data audit itself,” Dixon says. “The idea is to delegate neural-network training to the edge nodes, such as hospitals, and require them to furnish proofs that they did their jobs correctly.” The neural network still seeks to improve its parameters through iteration, but instead of asking edge nodes for patient data explicitly, it only asks in what direction and by how much to shift the current-iteration parameters to achieve an improvement. Earlier research proved that the edge nodes can perform that computation, and Dixon and DeStefano are now demonstrating that zk-SNARKs can verify that the edge nodes did (or didn’t do) exactly what they were supposed to. The result is a perfectly reliable neural network computation and a hugely valuable medical resource constructed from enormous quantities of verified patient data that were never actually shared. And by virtue of the zk-SNARKs, the system automatically recognizes and eliminates training information derived from faulty patient data, whether the error comes from a mistake or deliberate sabotage.
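The training scheme described above, in which edge nodes report only a direction and size for the parameter update rather than their data, can be sketched with a one-parameter model. This is a minimal illustration under stated assumptions: the model (`y ≈ weight * x`), the simple averaging of node updates, and all function names are hypothetical, and the proof that each node computed its update honestly is not shown.

```python
def local_gradient(weight, samples):
    """Computed on the edge node: gradient of mean squared error on private samples."""
    return sum(2 * (weight * x - y) * x for x, y in samples) / len(samples)


def federated_round(weight, node_datasets, learning_rate):
    """The coordinator sees only one gradient per node, never the samples themselves."""
    gradients = [local_gradient(weight, data) for data in node_datasets]
    average = sum(gradients) / len(gradients)
    return weight - learning_rate * average


def train(node_datasets, rounds=200, learning_rate=0.05, weight=0.0):
    """Repeat the ask-and-update cycle for a fixed number of rounds."""
    for _ in range(rounds):
        weight = federated_round(weight, node_datasets, learning_rate)
    return weight


if __name__ == "__main__":
    # Three nodes, each holding private (x, y) pairs that follow y = 3x.
    nodes = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(0.5, 1.5), (1.5, 4.5)],
        [(1.0, 3.0), (3.0, 9.0)],
    ]
    print("learned weight:", round(train(nodes), 6))
```

In the arrangement the article describes, each value returned by `local_gradient` would be accompanied by a proof that it was computed correctly, so a faulty or sabotaged contribution could be rejected before averaging.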

Eventually, Dixon hopes to see specific hardware designed to produce inherently self-verified data for neural-network training and general computation. Data would be quantum mechanically guaranteed and cryptographically certified upon creation, with subsequent zk-SNARKs generated as needed to verify the proper processing and the absence of tampering.

“Where we are now - verifying inputs and execution, securing data sources and supply chains - is just the beginning,” says Dixon. “In a world concerned with massive-scale misinformation, it’s difficult to overstate the public good that will ultimately come from this technology.”

Find more information about zk-SNARK on the LANL website. Work supported by the LDRD program was funded via project 20210529CR Information Science and Technology Institute (ISTI): Foundational Research in Information Science and Technology, and 20180719CR Rapid Response: Novel Computing. (LA-UR-21-27618)

Questions? Comments? Contact Us.

NA-114 Office Director: Thuc Hoang, 202-586-7050

- Integrated Codes: Jim Peltz, 202-586-7564

- Physics and Engineering Models/LDRD: Anthony Lewis, 202-287-6367

- Verification and Validation: David Etim, 202-586-8081

- Computational Systems and Software Environment: Si Hammond, 202-586-5748

- Facility Operations and User Support: K. Mike Lang, 301-903-0240

- Advanced Technology Development and Mitigation: Thuc Hoang